Pioneering AI-Driven Robotics for Human Augmentation, Healthcare, and Manufacturing

Human–Robot Interaction

Augmenting Human Dexterity through Contactless Human-Robot Interaction

Collaborative robots are increasingly used in high-risk environments where humans provide high-level decision-making and robots carry out physical tasks requiring strength and precision. Achieving smooth, reliable, and dexterous interaction, however, remains a challenge. We developed a vision-based framework for contactless control of a Universal Robots arm that progressed from gesture recognition to 2D hand tracking and, ultimately, to 3D motion feedback using dual cameras. By integrating spherical linear interpolation (slerp), the system achieved more natural and consistent orientation control than traditional methods. This work demonstrates how intuitive, vision-driven interfaces can expand human dexterity in collaborative robotics and paves the way for future multimodal systems combining IMU, EMG, and AI-assisted input.
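
The slerp step itself is compact. The sketch below assumes orientations are unit quaternions in (w, x, y, z) order; it illustrates the interpolation formula only and is not the project's control code (how target orientations are streamed to the UR arm is not shown).

```python
import math
from typing import Tuple

Quat = Tuple[float, float, float, float]  # (w, x, y, z), assumed unit-norm


def slerp(q0: Quat, q1: Quat, t: float) -> Quat:
    """Spherical linear interpolation between unit quaternions q0 and q1 at t in [0, 1]."""
    dot = sum(a * b for a, b in zip(q0, q1))
    # q and -q encode the same rotation; flip q1 so we travel along the shorter arc.
    if dot < 0.0:
        q1 = tuple(-c for c in q1)
        dot = -dot
    # For nearly identical orientations, fall back to normalized linear interpolation
    # to avoid dividing by sin(theta) close to zero.
    if dot > 0.9995:
        q = [a + t * (b - a) for a, b in zip(q0, q1)]
        norm = math.sqrt(sum(c * c for c in q))
        return tuple(c / norm for c in q)
    theta = math.acos(dot)  # angle between q0 and q1 on the 4D unit sphere
    s0 = math.sin((1.0 - t) * theta) / math.sin(theta)
    s1 = math.sin(t * theta) / math.sin(theta)
    return tuple(s0 * a + s1 * b for a, b in zip(q0, q1))
```

For example, halfway between the identity and a 90° rotation about z, it returns a 45° rotation about z, (cos 22.5°, 0, 0, sin 22.5°). Component-wise linear interpolation would instead need renormalization and would not sweep the rotation at constant angular speed.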

|Publication| |Video|

Vision-Driven Language Reasoning for Adaptive Robotic Arms in Unstructured Human–Robot Collaboration

Industrial robots excel at repetitive tasks in structured environments but struggle in dynamic, unpredictable settings. We built a platform around a Universal Robots UR10e arm equipped with an Intel RealSense depth camera and integrated with GPT-4, enabling the robot to perceive its surroundings, interpret natural-language instructions, and reason about tasks. The system successfully performs several tasks without relying on predefined object positions. By merging vision, reasoning, and language, this work lowers the barriers to deploying robots in unstructured environments and opens pathways to assistive applications in daily life.
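
One step a system like this typically needs, if object positions are not predefined, is turning a pixel and its depth reading into a 3D point the robot can reach. The sketch below assumes an ideal pinhole camera without lens distortion and a known camera-to-robot-base calibration; the intrinsics and calibration values are hypothetical. Intel's pyrealsense2 library provides `rs2_deproject_pixel_to_point` for the camera-frame part, including its distortion models. How the project actually connected detections, GPT-4 output, and motion commands is not specified in the text.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Intrinsics:
    fx: float  # focal length along x, in pixels
    fy: float  # focal length along y, in pixels
    cx: float  # principal point x, in pixels
    cy: float  # principal point y, in pixels


def deproject(k: Intrinsics, u: float, v: float, depth_m: float) -> Vec3:
    """Back-project pixel (u, v) with metric depth to a camera-frame point (pinhole, no distortion)."""
    return ((u - k.cx) * depth_m / k.fx, (v - k.cy) * depth_m / k.fy, depth_m)


def camera_to_base(rotation: Sequence[Sequence[float]], translation: Vec3, p: Vec3) -> Vec3:
    """Apply a (hypothetical) hand-eye calibration: p_base = R @ p_cam + t."""
    x, y, z = (
        sum(rotation[i][j] * p[j] for j in range(3)) + translation[i] for i in range(3)
    )
    return (x, y, z)
```

A pixel at the principal point maps onto the optical axis, so with these conventions `deproject(k, k.cx, k.cy, 0.5)` is `(0.0, 0.0, 0.5)`.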

|Publication| |Video|

Robo-Brush: Autonomous Toothbrushing with Deep Reinforcement Learning and Learning from Demonstration

Oral hygiene is a daily necessity, yet for individuals with limited mobility, toothbrushing can be difficult or impossible without assistance. Robo-Brush addresses this challenge by combining Deep Reinforcement Learning (DRL) with Learning from Demonstration (LfD) to teach a robotic arm how to brush teeth both thoroughly and safely. Using a high-fidelity MuJoCo simulation with anatomically detailed dental models, the system learned human-like brushing strategies that balanced coverage with gentle force. Results showed that adding just a small amount of demonstration data improved brushing coverage by over 6%, while also producing smoother, more natural motions. This work highlights the potential of intelligent, learning-enabled robots to take on delicate personal care tasks in assistive healthcare.
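
The coverage-versus-force trade-off can be made concrete with a per-step reward. The function below is purely illustrative: the reward form, the 2 N force limit, and the weights are hypothetical placeholders rather than values from Robo-Brush, and it leaves out the demonstration (LfD) component entirely.

```python
def brushing_reward(
    newly_covered: int,
    total_regions: int,
    contact_force_n: float,
    force_limit_n: float = 2.0,   # hypothetical comfort threshold, newtons
    w_coverage: float = 1.0,      # hypothetical weight on coverage progress
    w_force: float = 0.5,         # hypothetical weight on the force penalty
) -> float:
    """Hypothetical per-step reward: coverage progress minus a quadratic excess-force penalty.

    newly_covered: tooth-surface regions brushed for the first time this step.
    total_regions: number of regions on the simulated dental model.
    contact_force_n: brush-tooth contact force in newtons, as reported by the simulator.
    """
    coverage_gain = newly_covered / total_regions
    # Only force above the limit is penalized, so gentle contact costs nothing.
    excess_force = max(0.0, contact_force_n - force_limit_n)
    return w_coverage * coverage_gain - w_force * excess_force ** 2
```

The quadratic penalty grows quickly once the limit is exceeded, so an agent maximizing this reward is pushed toward covering new regions while keeping contact gentle.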

|Publication| |Video|

Imitation Learning-Based Adaptive Control for a Quasi-Serial Rehabilitation Robot Using Behavior Cloning

Rehabilitation robots must provide safe, adaptive support that adjusts to each patient’s unpredictable movements. Traditional controllers like PID offer precision but lack flexibility, while model-based approaches such as MPC require detailed patient models that are impractical in clinical settings. To address this gap, we developed a quasi-serial rehabilitation robot—combining serial and parallel linkages for compliant, back-drivable motion—controlled by a behavior cloning (BC) policy. Trained on synthetic demonstrations from PID and MPC-inspired strategies, the BC controller learned to deliver smooth, human-like assistance without hand-tuned parameters or complex models. Compared to PID, it achieved higher trajectory accuracy, significantly reduced jerk, and maintained more stable, consistent assistance. This approach highlights how imitation learning can enable scalable, personalized, and clinically relevant robot-assisted therapy.
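
Behavior cloning reduces to supervised regression from states to the teacher's actions. In this minimal sketch, a single hypothetical PD teacher stands in for the PID and MPC-inspired strategies, the state is just (tracking error, error rate), and a linear policy stands in for whatever function approximator the project used.

```python
import random
from typing import List, Tuple

State = Tuple[float, float]  # (tracking error, error rate)


def pd_teacher(error: float, d_error: float, kp: float = 8.0, kd: float = 1.5) -> float:
    """Hypothetical PD 'expert' that produces the synthetic demonstration actions."""
    return kp * error + kd * d_error


def collect_demos(n: int, seed: int = 0) -> List[Tuple[State, float]]:
    """Sample random states and record the teacher's assistive command for each."""
    rng = random.Random(seed)
    demos = []
    for _ in range(n):
        state = (rng.uniform(-1.0, 1.0), rng.uniform(-2.0, 2.0))
        demos.append((state, pd_teacher(*state)))
    return demos


def behavior_clone(demos: List[Tuple[State, float]]) -> Tuple[float, float]:
    """Fit a linear policy a = w1*e + w2*de by least squares (2x2 normal equations)."""
    s11 = s12 = s22 = b1 = b2 = 0.0
    for (e, de), a in demos:
        s11 += e * e
        s12 += e * de
        s22 += de * de
        b1 += e * a
        b2 += de * a
    det = s11 * s22 - s12 * s12
    # Cramer's rule for [[s11, s12], [s12, s22]] @ [w1, w2] = [b1, b2].
    w1 = (b1 * s22 - s12 * b2) / det
    w2 = (s11 * b2 - s12 * b1) / det
    return w1, w2
```

Because these demonstrations are noise-free, `behavior_clone(collect_demos(200))` recovers the teacher's gains (8.0 and 1.5) to numerical precision. With several teachers mixed in the data, the same regression would instead learn a compromise between them.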

|Publication| |Video|

Optimizing Human–Robot Teaming Performance through Q-Learning-Based Task Load Adjustment and Physiological Data Analysis

Human–robot teaming holds great promise for Industry 5.0, where human adaptability is combined with robotic precision. Yet performance often suffers when humans become either disengaged by low task load or stressed by excessive demands. To address this, we developed a framework that predicts and optimizes team performance using physiological signals such as heart rate, electrodermal activity, and motion data. By integrating Q-Learning, the system learns how different aspects of task load influence performance and dynamically adjusts the robot’s speed to keep humans in the optimal engagement zone. Machine learning models achieved 95% accuracy in forecasting human performance, enabling proactive interventions that reduce errors and sustain productivity.
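
The speed-adjustment loop can be illustrated with tabular Q-learning on a toy problem. Everything in this sketch is a hypothetical stand-in: five discrete robot-speed levels, an assumed "optimal engagement" level, and a reward equal to minus the distance from it. The actual framework derived its signal from physiological data and predicted performance.

```python
import random
from typing import List

SPEED_LEVELS = 5       # hypothetical discrete robot-speed settings 0..4
OPTIMAL_LEVEL = 2      # hypothetical level that keeps the operator optimally engaged
ACTIONS = (-1, 0, 1)   # slow down, keep speed, speed up


def step(level: int, action: int):
    """Toy environment: apply the speed change (clamped) and score the new level."""
    nxt = min(max(level + action, 0), SPEED_LEVELS - 1)
    reward = -abs(nxt - OPTIMAL_LEVEL)  # stand-in for predicted team performance
    return nxt, reward


def train(episodes=2000, horizon=20, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(SPEED_LEVELS)]
    for _ in range(episodes):
        s = rng.randrange(SPEED_LEVELS)
        for _ in range(horizon):
            # Epsilon-greedy exploration.
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[s][i])
            s2, r = step(s, ACTIONS[a])
            # Q-learning update: bootstrap from the greedy value of the next state.
            q[s][a] += alpha * (r + gamma * max(q[s2]) - q[s][a])
            s = s2
    return q


def greedy_policy(q) -> List[int]:
    """Best speed change for each speed level under the learned Q-table."""
    return [ACTIONS[max(range(len(ACTIONS)), key=lambda i: row[i])] for row in q]
```

After training, the greedy policy speeds the robot up from the two slow levels, holds at the assumed optimum, and slows it down from the two fast levels.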

|Publication| |Video|

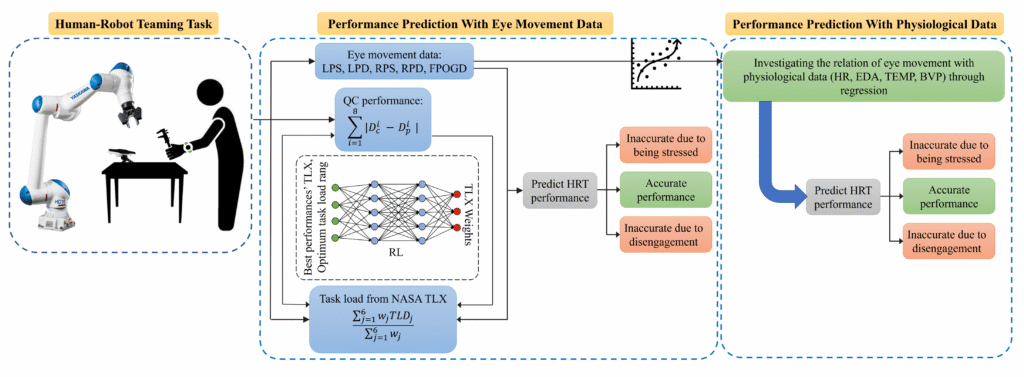

A framework for human-robot teaming performance prediction: Reinforcement learning and eye movement analysis

Human–robot teaming succeeds only when humans remain engaged and supported at the right workload: too little leads to disengagement, too much creates stress. To address this challenge, we designed a predictive framework that combines reinforcement learning with eye movement analysis to forecast human–robot team performance in real time. In a collaborative quality-control task, we captured pupillometry, gaze, and physiological signals while participants worked alongside a Yaskawa robot. Reinforcement learning was used to optimize task load weights from NASA-TLX surveys, and machine learning models trained on eye-tracking features achieved nearly 97% accuracy in predicting performance. Importantly, the study also showed that physiological signals such as heart rate and skin conductance can serve as practical alternatives to eye-tracking data, enabling more flexible deployment in industrial environments.
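
For reference, the standard weighted NASA-TLX score combines six subscale ratings with per-subscale weights; in the classic procedure the weights are tallies from 15 pairwise comparisons. The sketch below accepts arbitrary non-negative weights, which is where an optimizer such as a reinforcement learning agent could plug in. The ratings and weights used as an example are made up.

```python
from typing import Dict

# The six standard NASA-TLX subscales.
SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def weighted_tlx(ratings: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted workload score: sum(w_k * r_k) / sum(w_k), ratings on a 0-100 scale.

    With classic pairwise-comparison tallies the weights sum to 15; here any
    non-negative weights with a positive sum are accepted (e.g. learned ones).
    """
    total_weight = sum(weights[k] for k in SUBSCALES)
    if total_weight <= 0:
        raise ValueError("weights must have a positive sum")
    return sum(weights[k] * ratings[k] for k in SUBSCALES) / total_weight
```

With hypothetical ratings of 70, 20, 50, 40, 60, and 30 (in subscale order) and classic tallies of 5, 0, 3, 2, 4, and 1, the weighted score is 850 / 15 ≈ 56.7.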

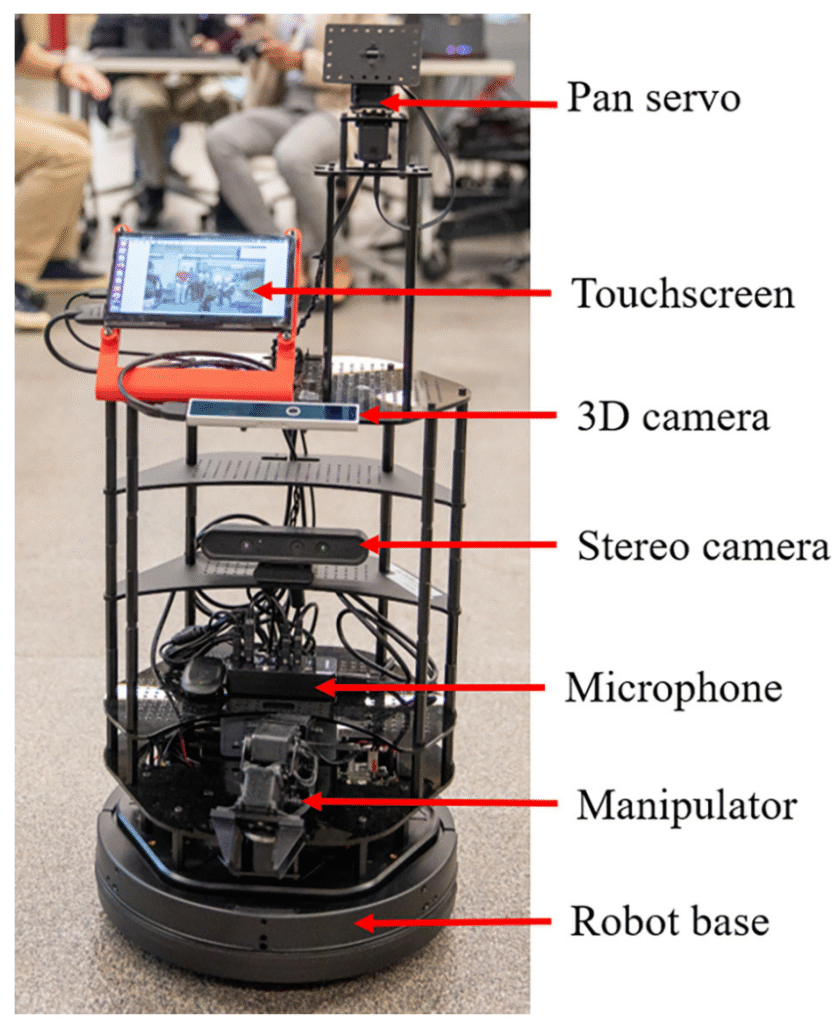

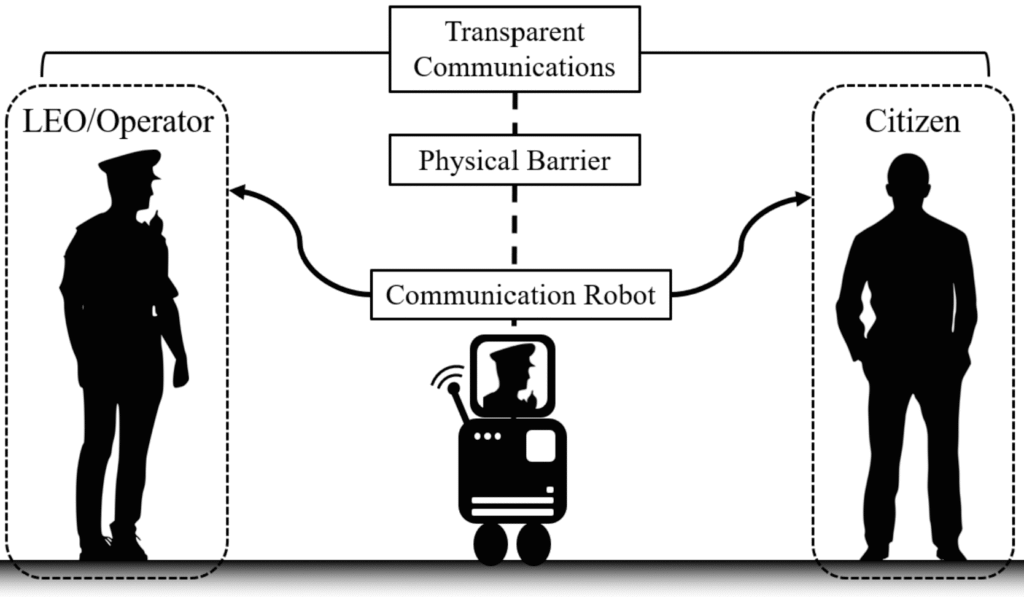

Teleoperated Communication Robot: A Law Enforcement Perspective

Police officers often operate in high-risk situations where maintaining distance, gathering information, and building trust can mean the difference between escalation and safety. To explore how robotics might assist, we developed a teleoperated communication robot built on a TurtleBot 2i base with cameras, audio, and a touchscreen for two-way interaction. Officers from four collaborating law enforcement agencies in Alabama trained with and piloted the robot, then shared their perspectives through pre- and post-surveys. The findings showed strong interest in features such as portability, terrain capability, long battery life, and 360° camera views, while humanoid appearance and emotional expression were less critical. Officers emphasized ease of operation and user-centered design as essential for trust and acceptance. This work demonstrates the potential of communication robots to support officers during traffic stops, crowd management, negotiations, and wellness checks, providing time, space, and safer pathways for dialogue between law enforcement and the public.

Supported by the National Science Foundation (NSF Award #2026658).

Exploring law enforcement officers’ expectations and attitudes about communication robots in police work

Robots have long been used in policing for bomb disposal and surveillance, but their potential role as communication partners is only beginning to be explored. In this study, law enforcement officers from multiple Alabama agencies shared their expectations and concerns about communication robots through questionnaires and focus groups. While many officers expressed skepticism—citing risks of malfunction, hacking, or loss of human rapport—they also recognized the potential of robots to act as force multipliers, enabling safer communication in high-risk encounters and extending capabilities beyond human limits. Key barriers included cost, training requirements, ethical concerns, and public acceptance. The findings point to the importance of improved design, officer training, and community education to build trust and ensure safe, effective adoption.

Supported by the National Science Foundation (NSF Award #2026658).

Controlling a Robot with Eye Gaze: Real-Time Human–Robot Interaction

This proof-of-concept project explored how eye-tracking can serve as a natural, non-contact interface for human–robot interaction. Using a real-time gaze tracking system, participants viewed a monitor displaying two objects—a water bottle and a hand sanitizer. When the participant looked at one of the objects, a Sawyer collaborative robot autonomously retrieved it. The project demonstrated how gaze alone can be used to command a robot in real time, creating an intuitive form of control without speech or physical input. While not developed into a full research study, this experiment highlights the potential of gaze-based interaction to support assistive applications, where individuals with limited mobility could control robots seamlessly using only their eyes.
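
A common way to turn raw gaze into a command is dwell-time selection, which avoids triggering on every passing glance (the "Midas touch" problem of gaze interfaces). The text does not say how this project decided that a participant was looking at an object, so the one-second dwell threshold and the on-screen bounding boxes below are assumptions.

```python
from typing import Dict, Iterable, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in screen pixels


def hit(box: Box, x: float, y: float) -> bool:
    """True if the gaze point (x, y) falls inside the box."""
    x0, y0, x1, y1 = box
    return x0 <= x <= x1 and y0 <= y <= y1


def select_by_dwell(
    samples: Iterable[Tuple[float, float, float]],  # (time_s, x, y) gaze samples
    boxes: Dict[str, Box],
    dwell_s: float = 1.0,  # hypothetical dwell threshold
) -> Optional[str]:
    """Return the first object looked at continuously for dwell_s seconds, else None."""
    current: Optional[str] = None
    start = 0.0
    for t, x, y in samples:
        target = next((name for name, b in boxes.items() if hit(b, x, y)), None)
        if target != current:
            # Gaze moved to a different object (or off all objects): restart the timer.
            current, start = target, t
        if current is not None and t - start >= dwell_s:
            return current
    return None
```

A 0.3 s glance at the water bottle followed by a steady look at the hand sanitizer selects the sanitizer; the glance alone selects nothing, so the robot would stay idle.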

|Video|

Online object detection

To enable collaborative robots to understand and adapt to their environment, I developed a real-time object detection system in Python using the Faster R-CNN algorithm. The system allowed a robot to identify and distinguish between common lab objects directly from camera input, demonstrating how perception can enhance autonomy in shared workspaces. Although computationally intensive (running on an older machine at 100% CPU, which caused noticeable delays), the system successfully recognized tools and objects in real time. A playful moment in the demo highlighted both the power and the fragility of visual AI: a screw attached to a U-shaped clamp was misclassified as a micrometer, underscoring how easily vision models can be deceived. This project illustrates both the promise of deep learning for robot perception and the practical challenges of real-time deployment under limited computing resources.
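
One step of the Faster R-CNN pipeline that runs on every frame is non-maximum suppression (NMS), which prunes overlapping boxes so that each object is reported once. The pure-Python version below is a sketch for illustration; libraries such as torchvision ship an optimized `torchvision.ops.nms` that likewise discards boxes whose IoU with a higher-scoring kept box exceeds the threshold. The boxes and scores used as an example are invented.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), top-left and bottom-right corners


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: keep the best-scoring box, drop boxes overlapping it too much, repeat."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep
```

For two heavily overlapping boxes (IoU ≈ 0.68) and one separate box, it keeps the higher-scoring member of the pair and the separate box.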

|Video|

AI in Rehabilitation

Real-Time Rehabilitation Tracking by Integration of Computer Vision and Virtual Hand

Accurate tracking of hand rehabilitation progress is often limited to costly clinical equipment and in-person assessments. To make this process more accessible, we developed a framework that combines computer vision and physics-based simulation to estimate finger joint dynamics in real time. From a single camera feed, MediaPipe extracts hand landmarks, which are mapped to the MANO hand model in PyBullet. A PID controller, optimized with genetic algorithms, computes the torques at the MCP and PIP joints, enabling precise tracking of finger movement and force generation. Validation against experimental exoskeleton data confirmed high accuracy, with mean squared torque errors as low as 10⁻⁵ (N·m)². By quantifying hand joint dynamics through vision alone, this approach provides a scalable path for tele-rehabilitation, prosthetic design, and adaptive robotic hand control, helping bridge the gap between clinical precision and at-home accessibility.
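
The torque-estimation idea can be sketched for a single finger joint: a discrete PID controller drives a simulated hinge toward a target angle (such as one derived from MediaPipe landmarks), and the commanded torque serves as the estimate. The joint model (inertia, damping), the gains, and the time step below are hypothetical placeholders; the project used the MANO model in PyBullet and tuned its gains with a genetic algorithm.

```python
from typing import List, Optional, Tuple


class PID:
    """Discrete PID controller: torque = kp*e + ki*sum(e*dt) + kd*de/dt."""

    def __init__(self, kp: float, ki: float, kd: float, dt: float) -> None:
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error: Optional[float] = None  # no derivative term on the first update

    def update(self, target: float, measured: float) -> float:
        error = target - measured
        self.integral += error * self.dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def track_joint(
    target_rad: float,
    seconds: float = 10.0,
    dt: float = 0.001,
    inertia: float = 0.01,          # hypothetical joint inertia, kg*m^2
    damping: float = 0.05,          # hypothetical passive damping, N*m*s/rad
    gains: Tuple[float, float, float] = (1.0, 0.5, 0.1),  # hypothetical (kp, ki, kd)
) -> Tuple[float, List[float]]:
    """Simulate one hinge joint (inertia * theta'' = torque - damping * theta')."""
    pid = PID(*gains, dt=dt)
    theta, omega = 0.0, 0.0
    torques: List[float] = []
    for _ in range(int(seconds / dt)):
        tau = pid.update(target_rad, theta)
        alpha = (tau - damping * omega) / inertia
        omega += alpha * dt  # semi-implicit Euler: update velocity first,
        theta += omega * dt  # then position with the new velocity
        torques.append(tau)
    return theta, torques
```

`track_joint(0.5)` returns the final joint angle (close to 0.5 rad with these placeholder values) and the full torque history, which is the quantity one would compare against exoskeleton measurements.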

|Publication| |Video|

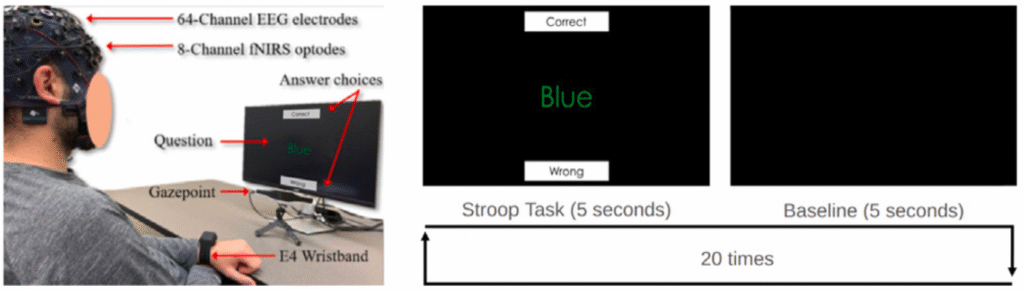

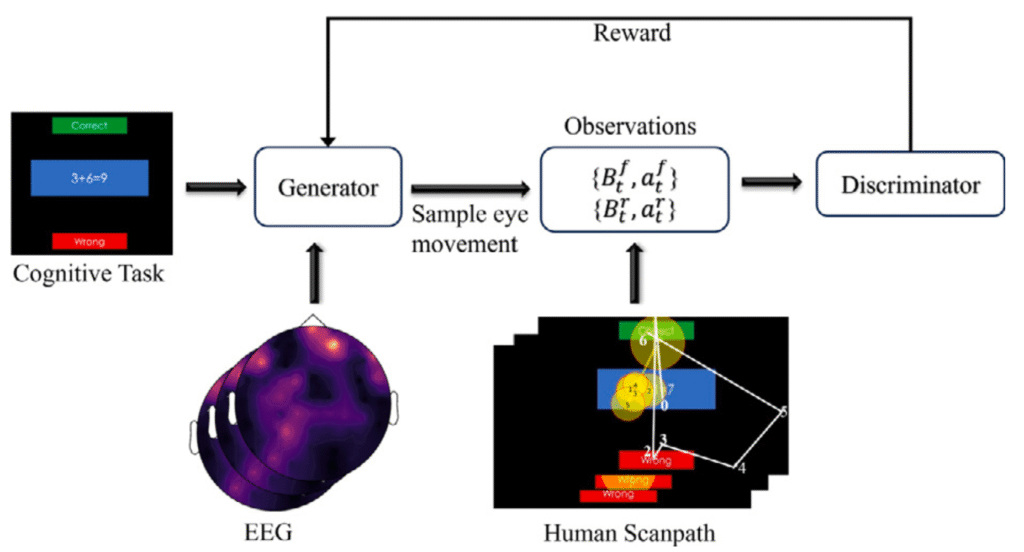

Transforming Stroop Task Cognitive Assessments with Multimodal Inverse Reinforcement Learning

The Stroop test has long been a benchmark for studying attention and cognitive control, yet traditional analyses treat behavior and neural signals separately, limiting their diagnostic power. In this work, we developed a framework that integrates eye-tracking and EEG data through Inverse Reinforcement Learning (IRL) to uncover how people allocate attention under congruent and incongruent Stroop conditions. By modeling gaze as a sequence of reward-driven actions and incorporating EEG features, the system captured hidden strategies behind scanpaths and revealed task-specific brain activation patterns. The IRL-EEG model aligned more closely with human attention than image-only baselines, showing improved prediction of fixation timing, sequence, and direction—especially under high-conflict trials. These results highlight the promise of multimodal IRL for personalized cognitive assessment and point toward new tools for the early detection of neurodegenerative disorders, where subtle deficits in attention and control may first appear.
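
The idea of gaze as reward-driven actions can be illustrated with the simplest case: each fixation is a one-step choice among candidate locations, scored by a linear reward over features. This sketch assumes hypothetical per-candidate features (for instance, an image-salience value and an EEG-derived value). Fitting it by maximizing the likelihood of the observed choices is the one-step special case of maximum-entropy IRL, whose gradient is "observed features minus expected features". The project's model over full scanpaths is not reproduced here.

```python
import math
from typing import List, Sequence, Tuple

Candidates = Sequence[Sequence[float]]  # one feature vector per candidate fixation location


def choice_probs(w: Sequence[float], candidates: Candidates) -> List[float]:
    """Boltzmann policy: P(i) proportional to exp(w . phi_i), computed stably."""
    scores = [sum(wk * fk for wk, fk in zip(w, phi)) for phi in candidates]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


def fit_reward(
    demos: Sequence[Tuple[Candidates, int]], n_features: int, lr: float = 0.1, iters: int = 500
) -> List[float]:
    """Gradient ascent on the mean log-likelihood of observed fixation choices."""
    w = [0.0] * n_features
    for _ in range(iters):
        grad = [0.0] * n_features
        for candidates, chosen in demos:
            p = choice_probs(w, candidates)
            for k in range(n_features):
                # Feature matching: chosen features minus features expected under the policy.
                expected = sum(pi * phi[k] for pi, phi in zip(p, candidates))
                grad[k] += candidates[chosen][k] - expected
        w = [wk + lr * gk / len(demos) for wk, gk in zip(w, grad)]
    return w


def mean_log_likelihood(w: Sequence[float], demos: Sequence[Tuple[Candidates, int]]) -> float:
    return sum(math.log(choice_probs(w, c)[i]) for c, i in demos) / len(demos)
```

If the observed fixations always land on the candidate with the largest first feature, the learned weight on that feature becomes positive, and the fitted reward explains the choices better than a uniform policy.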

Reconstructing Human Gaze Behavior from EEG Using Inverse Reinforcement Learning

Understanding how the brain drives eye movements is essential for both cognitive neuroscience and early detection of neurodegenerative disorders. In this study, we introduced an inverse reinforcement learning (IRL) framework that integrates EEG signals with image data to reconstruct human gaze behavior during arithmetic tasks of varying difficulty. By modeling gaze as a sequence of reward-driven actions, the IRL-EEG system was able to predict scanpaths that closely matched real human fixation patterns, outperforming image-only baselines. The results showed that EEG features not only improved accuracy but also captured individual differences in how participants approached complex problems. This approach provides new insight into the link between brain activity and visual attention and lays the groundwork for diagnostic tools and personalized interventions in conditions such as Alzheimer’s and Parkinson’s disease.

Inertia-Constrained Reinforcement Learning to Enhance Human Motor Control Modeling

Accurately modeling human locomotion remains a challenge because reinforcement learning simulations often diverge from natural movement. To address this, we integrated inertial measurement unit (IMU) data into the reward function of reinforcement learning agents. Using a single pelvis-mounted IMU as bio-inspired guidance, our framework constrained simulations of musculoskeletal walking models in OpenSim. Compared to standard RL approaches, this method achieved faster convergence, higher rewards, and more human-like gait trajectories, with straighter walking paths and reduced error in roll, pitch, and yaw. By grounding simulations in real sensor data, this approach provides a powerful tool for studying neuromechanics, diagnosing locomotor disorders, and designing personalized rehabilitation strategies.
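
One way to fold IMU data into the reward is a tracking bonus that peaks when the simulated pelvis orientation matches the recorded one. The exponential shape, the scale, and the weight below are hypothetical choices, not the published reward. The angle wrapping keeps a yaw error near ±180° from being overcounted.

```python
import math
from typing import Sequence


def wrap_angle(a: float) -> float:
    """Wrap an angle in radians to [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def imu_guidance_reward(
    sim_rpy: Sequence[float], imu_rpy: Sequence[float], scale: float = 5.0
) -> float:
    """Reward in (0, 1]: 1.0 when simulated pelvis roll/pitch/yaw match the IMU recording."""
    err_sq = sum(wrap_angle(s - m) ** 2 for s, m in zip(sim_rpy, imu_rpy))
    return math.exp(-scale * err_sq)


def shaped_reward(
    task_reward: float, sim_rpy: Sequence[float], imu_rpy: Sequence[float], w_imu: float = 0.5
) -> float:
    """Hypothetical combination: the original task reward plus a weighted IMU-tracking bonus."""
    return task_reward + w_imu * imu_guidance_reward(sim_rpy, imu_rpy)
```

Because the bonus is bounded, it guides the agent toward human-like pelvis motion without overwhelming the underlying locomotion objective.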

|Publication| |Video|

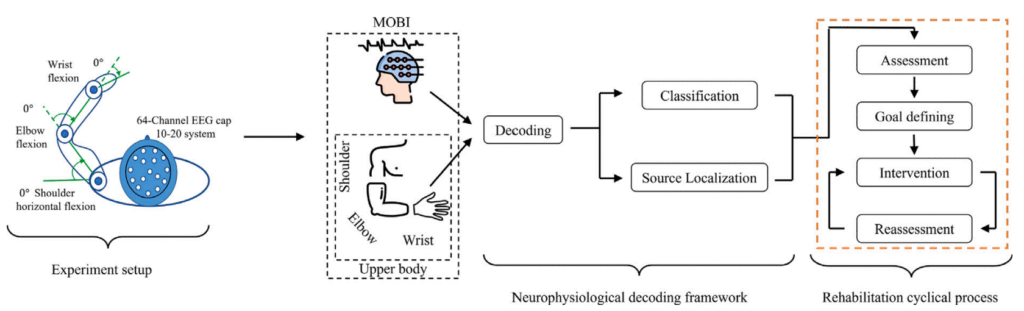

Toward Personalized Rehabilitation Employing Classification, Localization, and Visualization of Brain–Arm Movement Relationships

Stroke rehabilitation is most effective when tailored to each patient’s unique brain–movement patterns, yet most current approaches rely on generalized assessments. In this study, we developed a computational framework that integrates EEG-based classification, source localization, and visualization to decode brain activity during arm movements. Using high-density EEG, we analyzed wrist, elbow, and shoulder motions, applying machine learning to classify movement type with over 95% accuracy and source localization (sLORETA) to identify the cortical regions consistently engaged in each task. The results revealed distinct, spatially organized brain activation patterns associated with specific joint movements, providing clinically meaningful insights into how the brain generates motor commands. By linking classification accuracy with cortical localization, this framework demonstrates the potential for personalized, data-driven rehabilitation strategies that adapt therapy to each patient’s neurological profile.

Supported by the National Institute on Disability, Independent Living, and Rehabilitation Research (NIDILRR), Award #90REMM0001.

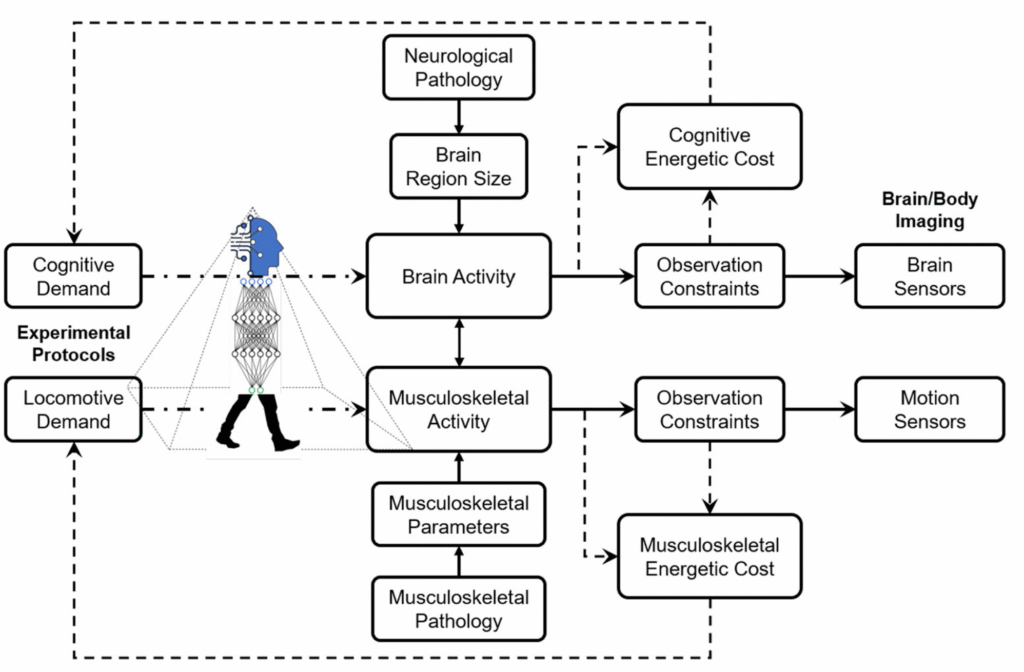

Experiment protocols for brain-body imaging of locomotion: A systematic review

Studying human locomotion requires capturing not only muscle activity but also the brain’s role in coordinating movement. Yet reproducing such experiments has been difficult due to the lack of standardized protocols. In this systematic review, we examined over 100 neuroimaging studies of locomotion, comparing tasks such as walking, running, cycling, and dual-task walking, along with the brain and body sensors used to record them. The findings show that EEG is the most widely adopted tool for detecting brain activity during locomotion and that walking remains the most commonly studied task. Running, by contrast, has received little attention, while dual-task walking has proven particularly valuable for revealing the cognitive demands of movement. The review highlights critical gaps in protocol design, from handling motion artifacts to balancing cognitive and locomotive demands, and proposes standards to guide future experiments. By outlining these methodological considerations, this work provides a roadmap for reliable, reproducible brain–body imaging of locomotion that can inform both clinical applications and digital human modeling.

A comprehensive decoding of cognitive load

Understanding how the brain and body respond to different levels of mental demand is critical for both clinical diagnosis and human–robot interaction. In this project, we developed a multimodal framework that combined high-resolution EEG, eye-tracking, and electrodermal activity (EDA) sensing to decode cognitive load during controlled arithmetic tasks. The study revealed that eye movement, particularly pupil diameter variation, is a dynamic readout of cognitive processes but is jointly influenced by brain activity and skin conductance. Source localization showed that the frontal lobe became more engaged as task difficulty increased, while machine learning models trained on multimodal features achieved over 98% accuracy in predicting cognitive load levels. By offering a holistic view of neurophysiological activation, this work provides new insights into brain–body dynamics and establishes a foundation for applications ranging from early diagnosis of neurodegenerative diseases to adaptive human–robot collaboration.

Supported by the National Institute on Disability, Independent Living, and Rehabilitation Research (NIDILRR), Award #90REMM0001.

Vibration and Control

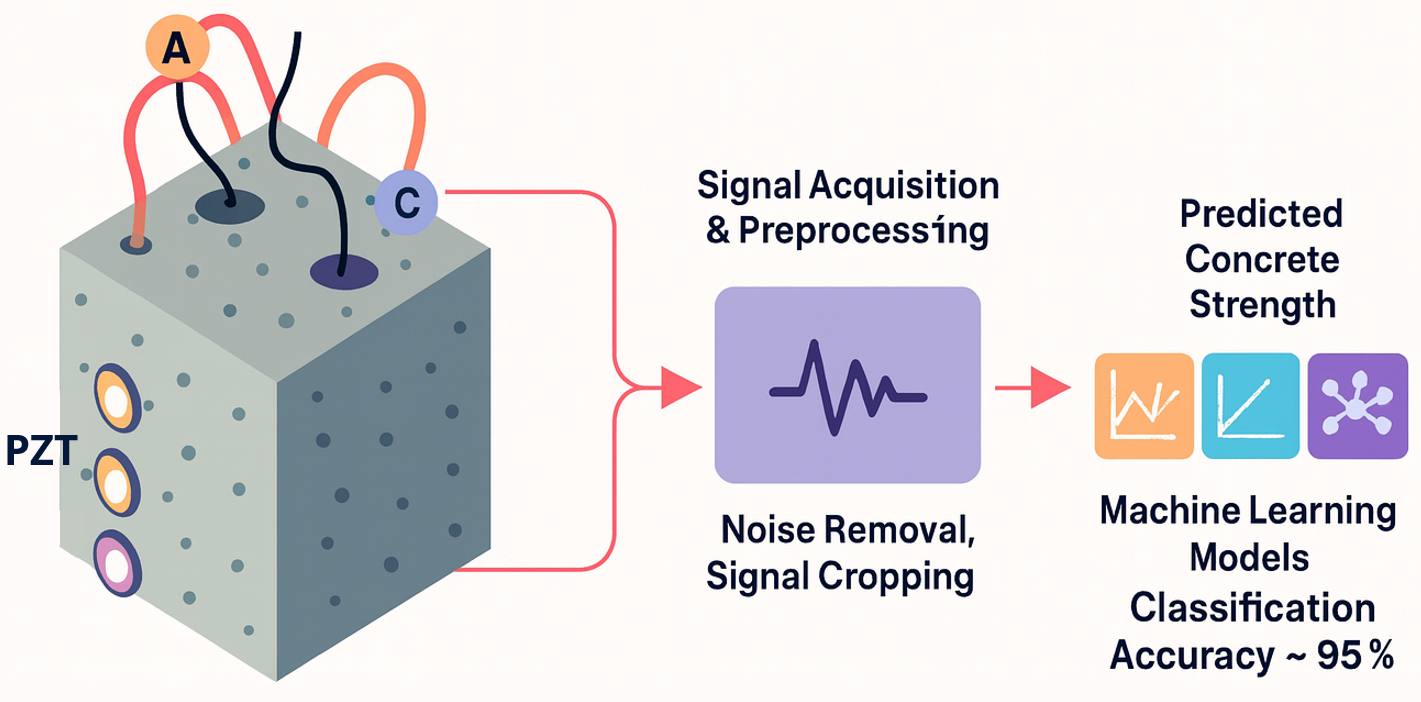

AI-Powered Structural Health Monitoring Using Multi-Type and Multi-Position PZT Networks

Accurately assessing the early-age strength of concrete is vital for safe and efficient construction, yet traditional non-destructive tests remain limited. We developed an AI-enhanced monitoring framework that integrates multiple types and positions of PZT sensors to capture stress-wave responses during curing. Machine learning models trained on these signals predicted compressive strength with up to 95% accuracy, far outperforming single-sensor methods. This approach enables reliable, real-time strength estimation for safer formwork removal and scheduling, while also laying the groundwork for long-term structural health monitoring of smart infrastructure.

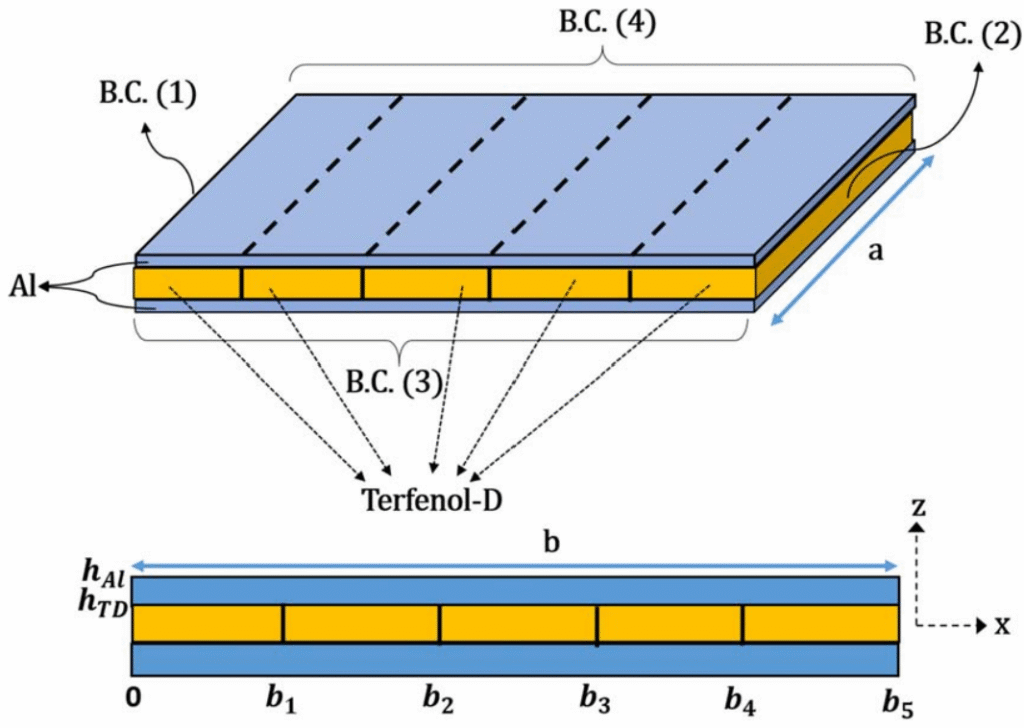

Band Gap and Stiffness Tuning of a Sandwich Plate by Magnetostrictive Materials

Vibrations near structural resonances can cause severe instability or even failure, making frequency control essential in engineering systems. In this work, we designed a sandwich plate with a magnetostrictive core whose stiffness can be actively tuned with magnetic fields. By dividing the magnetostrictive layer into multiple controllable sections, the plate’s natural frequencies could be shifted away from dangerous resonances, effectively reducing vibration amplitudes. Finite element simulations validated the analytical model and further revealed that selective activation of magnetostrictive sections can create periodic stiffness patterns that generate vibration band gaps, filtering out harmful oscillations. This approach demonstrates how magnetostrictive materials enable smart structures with tunable frequency response, paving the way for adaptive vibration suppression and noise isolation in aerospace, automotive, and civil engineering applications.
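
A rough way to see why stiffness tuning moves resonances is the classical result for a simply supported, homogeneous thin (Kirchhoff) rectangular plate of side lengths a and b, thickness h, density ρ, Young's modulus E, and Poisson's ratio ν. Treating the sandwich as a homogenized plate with an effective flexural rigidity D, and assuming the magnetic field changes stiffness but not mass, are simplifying assumptions here rather than the analytical model of this work.

```latex
\omega_{mn} = \pi^{2}\left[\left(\frac{m}{a}\right)^{2} + \left(\frac{n}{b}\right)^{2}\right]\sqrt{\frac{D}{\rho h}},
\qquad D = \frac{E h^{3}}{12\,(1-\nu^{2})}

\frac{\omega_{mn}'}{\omega_{mn}} = \sqrt{\frac{D + \Delta D}{D}}
\quad\Longrightarrow\quad
\frac{\Delta D}{D} = 0.10 \;\Rightarrow\; \frac{\omega_{mn}'}{\omega_{mn}} = \sqrt{1.10} \approx 1.049
```

Since every mode scales with the square root of the rigidity, a 10% stiffness increase shifts all natural frequencies up by about 4.9%, which is the lever that field-controlled magnetostrictive sections provide.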

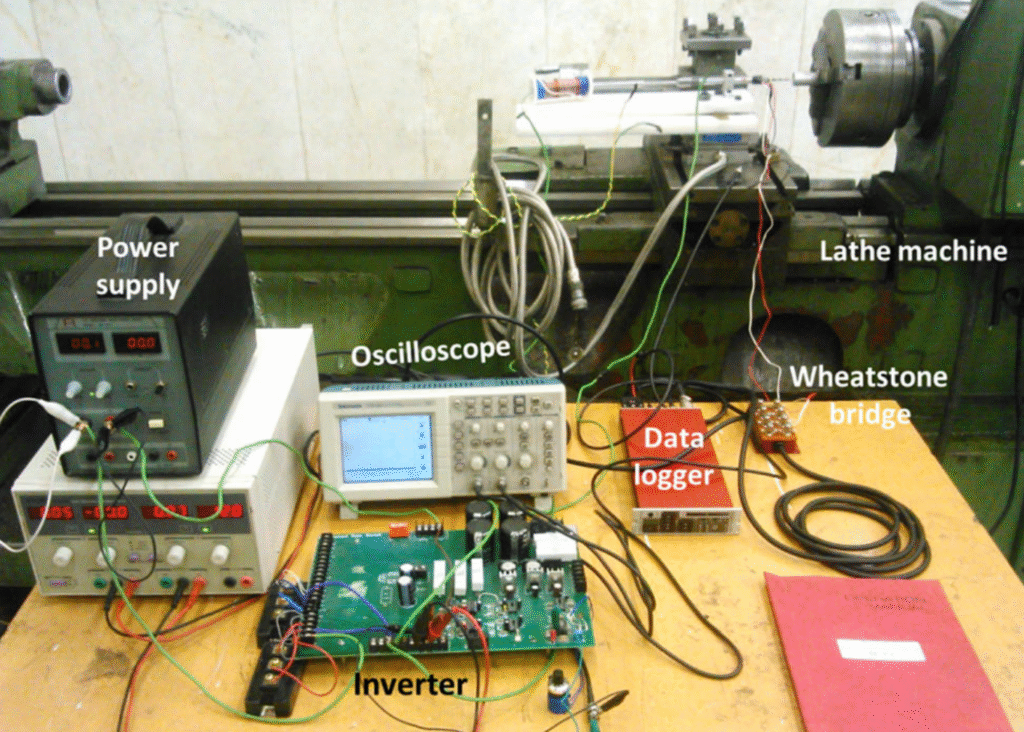

Design and Fabrication of a Novel Vibration-Assisted Drilling Tool Using a Torsional Magnetostrictive Transducer

Conventional drilling often suffers from high torque demand, poor surface quality, and rapid tool wear. To address these challenges, we designed and fabricated a novel vibration-assisted drilling tool powered by a torsional magnetostrictive transducer. Built from permendur and excited through combined axial and circular magnetic fields, the transducer generates torsional vibrations that are transmitted to the drill bit via a custom wave transmitter and coupling system. Tests on aluminum workpieces demonstrated that torsional vibrations reduced drilling torque by an average of 60%, improved hole size accuracy by 55%, and lowered surface roughness by 25% compared to conventional drilling. By breaking chips more effectively and reducing friction, the vibrations also extended tool life and improved machining stability. This work shows how magnetostrictive actuation can unlock new efficiencies in vibration-assisted machining.